MotorMark Dataset

Features

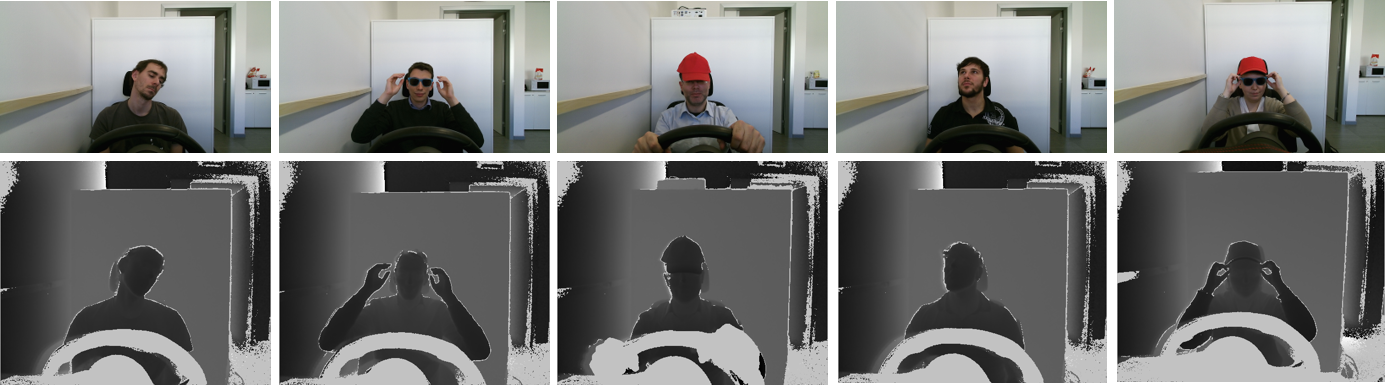

- Deep learning oriented: the dataset is composed of more than 30k frames. A variety of subjects is guaranteed (35 subjects in total);

- Automotive context: we recreate an automotive context. The subject is placed in front of a real car dashboard and performs real in-car actions, such as rotating the steering wheel, shifting gears and so on;

- Variety: subjects are asked to follow a constrained path (4 LEDs are placed in correspondence with the speedometer, the rev counter, the infotainment system and the left wing mirror), to rotate their head in fixed positions, or to move their head freely. In addition, subjects can wear glasses, sunglasses and a scarf, which generates partial face and landmark occlusions;

- Landmark annotations: the annotation of 68 landmark positions is available on both RGB and depth frames, following the ISO MPEG-4 standard. The ground truth has been manually generated: the annotator was provided with an initial estimate computed by the algorithm included in the dlib library (www.dlib.net), which gives landmark positions on RGB images. The landmark coordinates are then projected onto the depth images using the internal calibration tool of the Microsoft Kinect SDK;

- High quality: RGB and depth images are acquired with a spatial resolution of 1280x720 (HD) and 512x424, respectively.
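As a rough illustration of how the 68-point annotations described above might be sanity-checked, the sketch below flags which landmarks of a frame fall inside the image bounds. The in-memory layout (a list of `(x, y)` pixel pairs) and the helper name `visible_mask` are assumptions made for this example; they are not the dataset's actual file format or API, so please refer to the readme for the real layout.

```python
from typing import List, Sequence, Tuple

NUM_LANDMARKS = 68  # number of annotated landmarks per frame, as stated above

Point = Tuple[float, float]  # assumed (x, y) pixel coordinates


def visible_mask(landmarks: Sequence[Point], width: int, height: int) -> List[bool]:
    """Return one flag per landmark: True if (x, y) lies inside the frame."""
    if len(landmarks) != NUM_LANDMARKS:
        raise ValueError(f"expected {NUM_LANDMARKS} landmarks, got {len(landmarks)}")
    return [0 <= x < width and 0 <= y < height for x, y in landmarks]


# Hypothetical annotation: 68 points along a diagonal, the last one off-frame.
rgb_landmarks = [(10.0 * i, 5.0 * i) for i in range(NUM_LANDMARKS)]
rgb_landmarks[-1] = (1300.0, 200.0)  # x exceeds the 1280-pixel RGB width

mask = visible_mask(rgb_landmarks, width=1280, height=720)
print(sum(mask), "of", NUM_LANDMARKS, "landmarks fall inside the RGB frame")
```

The same check can be applied to the depth annotations by passing the depth frame's width and height instead of the RGB ones.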

Download

To download the dataset, we ask for an email address to which we will send a download link. This also helps us keep in touch in case errors are found or updates become available.

Here you can find a readme file for the MotorMark dataset.

We believe in open research and we are happy if you find this data useful. If you use it, please cite our work.

@inproceedings{borghi17landmark,
  title = {Fast and Accurate Facial Landmark Localization in Depth Images for In-car Applications},
  author = {Frigieri, Elia and Borghi, Guido and Vezzani, Roberto and Cucchiara, Rita},
  booktitle = {Proceedings of the 19th International Conference on Image Analysis and Processing (ICIAP)},
  year = {2017}
}

Acknowledgments

We sincerely thank all the people who participated in the experiments that led to the creation of this dataset.