Vision for Augmented Experience

The project VAEX, "Vision for Augmented Experiences", has the goal to develop new ways of enjoyment of cultural experiences in smart cities, allowing the user to recognize targets in video from smartphones and wearable cameras, create and share personalized video on social networks and web TV, to enjoy automatic annotation tools and multimedia search on the web.

The research focuses on some new technologies still partly unexplored:

- Egocentric-vision (or ego-vision), new computer vision technique to automatically recognizes what is captured in first-person perspective videos.

- Semantic annotation of content and knowledge extraction from captured video, to achieve recognition of objects, people, concepts and events.

- Augmented and customized video rendering, to create user experience personalized video summaries: in particular video summarization techinques are used to mantain the relevant semantic content from hours of original video, summarizing it in a few minutes, and video personalization techinques aim to enrich the video with additional heterogeneous media data, on the basis of user profile.

The project “Vision for Augmented Experiences” was primarily funded by Fondazione Cassa di Risparmio Modena

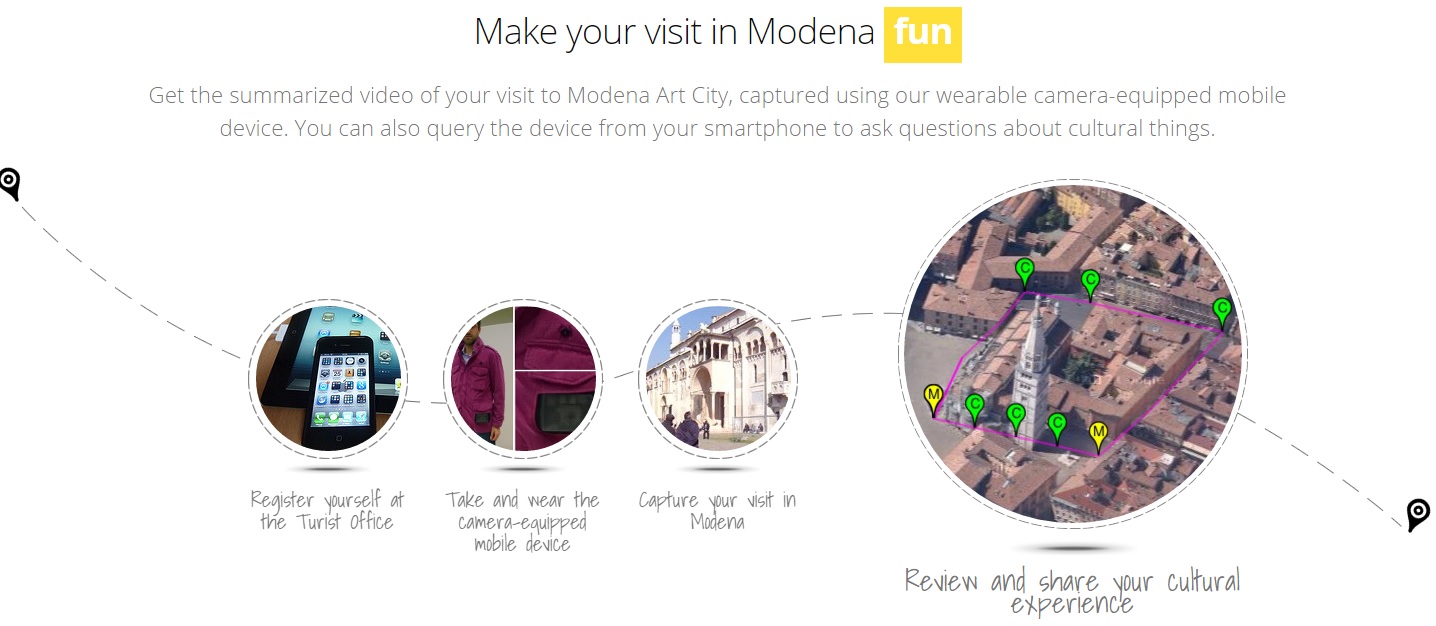

The project "Vision for Augmented Experiences", funded by the "Fondazione Cassa di Risparmio Modena", aims to tackle some open research challenges about cultural experiences in smart cities, for the entire lifecycle of visit capture, from the video acquisition, to its processing, summarization, personalization and social sharing.

The research focuses on some new computer science techniques, still largely unexplored.

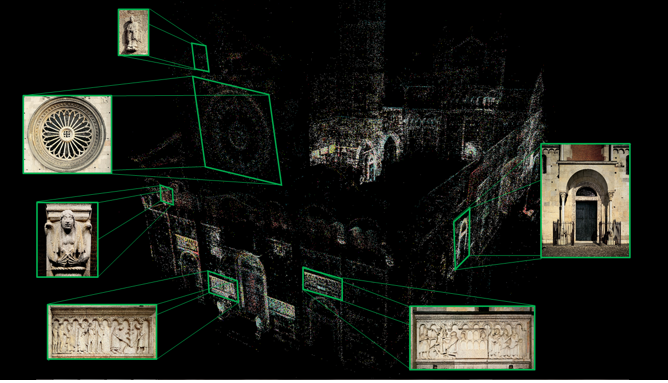

- Egocentric-vision (or ego-vision): new computer vision techniques to automatically recognize what is taken in video captured from a first person perspective. This new research filed is very hard to tackle as egocentric captured videos consist of long streams of data with a ceaseless jumping appearance, frequent changes of observer's focus and lack of hard cuts between scenes, thus requiring new methodologies for automatic analysis and understanding. It s' important to recognize in real time what the user sees and and interact with him to provide information and knowledge and create a personalized experience. New models will be used for recognition of gestures and social behavior of people.

- Semantic annotation of content and knowledge extraction from captured video, to achieve recognition of objects, concepts and visual events through the extraction of low level descriptors as visual features, use of statistical and pattern recognition algorithms in machine learning classification with noisy training data automatically extracted from the web, and the use of audio, visual and textual cross-media descriptors, through artificial intelligence methods to provide knowledge management, such as data mining and topic extraction.

- Augmented and customized video rendering, to create user experience personalized video summaries: in particular video summarization techinques are used to mantain the relevant semantic content from hours of original video, summarizing it in a few minutes, and video personalization techinques aim to enrich the video with additional heterogeneous media data, on the basis of user profile. Recommendation and profiling methods will be integrated with automatic content-based and collaborative-based solutions .

The application of these techniques in the fields of Cultural Heritage is extremely innovative: the idea is to take a cue from social marketing techniques to process and enrich cultural experiences video captured through machine learning advanced solutions in order to achieve effective deepening adaptive solutions . Web and mobile applications are implemented using human-machine interaction technologies to enrich the traditional web and mobile techinques. In particular, interactive applications based on the retrieval of important details on cultural heritage buildings will be provided by implementing the recent research efforts in the field.

A knowledge management platform will be implemented for the storage and exchange of information between the different modules of the project. For this purpose, new solutions and approaches for the management of Big Data will be developed to automatically connect metadata and visual content to the web knowledge repositories.

Publications

| 1 |

Alletto, Stefano; Abati, Davide; Serra, Giuseppe; Cucchiara, Rita

"Exploring Architectural Details Through aWearable Egocentric Vision Device"

SENSORS,

vol. 16(2),

pp. 1

-15

,

2016

| DOI: 10.3390/s16020237

Journal

|

Video Demo

Project Info

Staff:

- Rita Cucchiara

- Giuseppe Serra

- Patrizia Varini

- Stefano Alletto

- Marcella Cornia

- Riccardo Gasparini

- Davide Abati