Research on Automotive

Hand Monitoring and Gesture Recognition for Human-Car Interaction

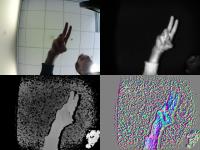

Gesture-based human-computer interaction is a well-established area of computer vision research. Our work focuses on gesture recognition in the automotive field.

Our main goal is to develop a dynamic hand gesture-based system for human-vehicle interaction that reduces driver distraction.

To recognize a gesture, the hand must first be detected in a given image. The performed gesture is then analyzed and a gesture class is predicted, allowing smooth communication between the driver and the car.

Video synthesis from Intensity and Event Frames

Event cameras, neuromorphic devices that naturally respond to brightness changes, have multiple advantages over traditional cameras. However, the difficulty of applying traditional computer vision algorithms, such as semantic segmentation and object detection, to event data limits their usability.

Therefore, we investigate the use of a deep learning-based architecture that combines an initial grayscale frame and a series of event data to estimate the following intensity frames. We evaluate the proposed approach on a public automotive dataset, providing a fair comparison with a state-of-the-art approach.
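To make the input/output relationship concrete, the sketch below shows a simple non-learned baseline, not the deep architecture described above. It relies on the idealized event-camera model, in which each event signals a fixed change in log intensity at one pixel. The event format `(x, y, polarity)`, the function name `integrate_events`, and the contrast value `0.2` are assumptions made for illustration only.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical event format: (x, y, polarity), with polarity in {+1, -1}.
Event = Tuple[int, int, int]


def integrate_events(
    frame: Sequence[Sequence[float]],
    events: Sequence[Event],
    contrast: float = 0.2,  # assumed contrast threshold, sensor dependent
) -> List[List[float]]:
    """Estimate a later intensity frame by accumulating events in log space.

    `frame` holds grayscale intensities in [0, 1]. Each event adds or
    subtracts `contrast` from the log intensity of its pixel.
    """
    eps = 1e-3  # avoids log(0) on black pixels
    log_frame = [[math.log(value + eps) for value in row] for row in frame]
    for x, y, polarity in events:
        log_frame[y][x] += contrast * polarity
    # Back to linear intensities, clamped to the valid range.
    return [
        [min(1.0, max(0.0, math.exp(value) - eps)) for value in row]
        for row in log_frame
    ]


initial = [[0.5, 0.5], [0.5, 0.5]]
events = [(0, 0, 1), (0, 0, 1), (1, 1, -1)]
estimate = integrate_events(initial, events)
```

Direct integration of this kind accumulates sensor noise over time, which is one reason a learned model that sees both the initial frame and the events is attractive.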

Learning to Generate Faces from RGB and Depth data

We investigate the Face Generation task, inspired by the Privileged Information approach, in which the main idea is to add knowledge at training time -- the generated faces -- in order to improve the performance of the presented systems at testing time.

Our main research questions are:

- Is it possible to generate gray-level face images from the corresponding depth ones?

- Is it possible to generate depth face maps from the corresponding gray-level ones?

Experimental results confirm the effectiveness of the proposed approach.

Mercury: a framework for Driver Monitoring and Human Car Interaction

The deep learning-based models and frameworks investigated at AImageLab have been used to implement a framework that monitors the driver's attention level and enables interaction between the driver and the car through the Natural User Interface paradigm. Driver attention is difficult to define unambiguously and has several nuances. For this reason, we focus on attention understood as the driver's level of fatigue, computed through the PERCLOS measure, and on fine and coarse estimation of the driver's gaze.
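PERCLOS is commonly defined as the fraction of time, within an observation window, during which the eyes are mostly closed. The sketch below illustrates that definition only and is not Mercury's implementation. The per-frame `openness` input (assumed to come from some upstream eye-state estimator) and the `0.2` threshold, which corresponds to an eye at least 80% closed, are assumptions.

```python
from typing import Sequence


def perclos(openness: Sequence[float], closed_below: float = 0.2) -> float:
    """Fraction of frames in which the eye counts as closed.

    `openness` holds one value per frame in [0, 1] (1 = fully open).
    Both the input format and the 0.2 threshold are illustrative assumptions.
    """
    if not openness:
        raise ValueError("openness must contain at least one frame")
    closed_frames = sum(1 for value in openness if value < closed_below)
    return closed_frames / len(openness)


# Ten frames, three of which fall below the threshold.
window = [0.9, 0.8, 0.1, 0.05, 0.7, 0.9, 0.15, 0.85, 0.9, 0.95]
score = perclos(window)
```

A higher score over a sliding window would indicate more time spent with closed eyes, which is typically read as a sign of fatigue.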

Face Verification with Depth Images

The computer vision community has broadly addressed the face recognition problem in both the RGB and the depth domain.

Traditionally, this problem is categorized into two tasks:

- Face Identification: comparison of an unknown subject’s face with a set of faces (one-to-many).

- Face Verification: comparison of two faces in order to determine whether they belong to the same person or not (one-to-one).

Most existing face recognition algorithms process RGB images, while only a minority of methods investigate other image types, such as depth maps or thermal images. Recent works employ very deep convolutional networks to embed face images in a d-dimensional space. Unfortunately, these very deep architectures typically rely on very large-scale datasets that contain only RGB or intensity images, such as Labeled Faces in the Wild (LFW), YouTube Faces Database (YTF) and MS-Celeb-1M.
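Once faces are embedded as vectors, the two tasks reduce to comparisons between embeddings. The sketch below is a generic illustration of that idea and does not describe how JanusNet works. The embeddings, the names `verify` and `identify`, the cosine-similarity measure, and the `0.5` threshold are all assumptions.

```python
import math
from typing import Dict, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two non-zero embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def verify(a: Sequence[float], b: Sequence[float], threshold: float = 0.5) -> bool:
    """One-to-one: do the two embeddings belong to the same person?"""
    return cosine_similarity(a, b) >= threshold


def identify(probe: Sequence[float], gallery: Dict[str, Sequence[float]]) -> str:
    """One-to-many: return the gallery identity closest to the probe."""
    return max(gallery, key=lambda name: cosine_similarity(probe, gallery[name]))


# Toy 3-dimensional embeddings; real ones would come from a trained network.
gallery = {"alice": [1.0, 0.0, 0.0], "bob": [0.0, 1.0, 0.0]}
probe = [0.9, 0.1, 0.0]

same_as_alice = verify(probe, gallery["alice"])
same_as_bob = verify(probe, gallery["bob"])
best_match = identify(probe, gallery)
```

In practice the threshold would be tuned on a validation set to balance false acceptances against false rejections.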

The main goal of this work is to present a framework, called JanusNet, that tackles the face verification task by analysing depth images only.

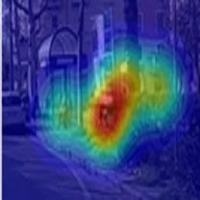

Dr(eye)ve: a Dataset for Attention-Based Tasks with Applications to Autonomous Driving

Autonomous and assisted driving are undoubtedly hot topics in computer vision. However, the driving task is extremely complex and a deep understanding of drivers’ behavior is still lacking. Several researchers are now investigating the attention mechanism in order to define computational models for detecting salient and interesting objects in the scene.

Nevertheless, most of these models only refer to bottom-up visual saliency and are focused on still images. Instead, during the driving experience the temporal nature and peculiarity of the task influence the attention mechanisms, leading to the conclusion that real life driving.

Driver Attention through Head Localization and Pose Estimation

Automatic recognition of the driver's attention level remains an open research problem.

This project investigates new non-invasive systems for real-time monitoring of drivers' state of attention and aims to develop a low-cost multi-sensor system that can be installed on vehicles already in circulation. Computer vision and machine learning techniques as well as multi-physical technologies will be explored.

Landmark Localization in Depth Images

Correct and reliable localization of facial landmarks enables several applications in many fields, ranging from human-computer interaction to video surveillance.

For instance, it can provide valuable input for monitoring the driver's physical state and attention level in the automotive context. In this paper, we tackle the problem of facial landmark localization through a deep learning approach. The developed system is fast and, in particular, more reliable than state-of-the-art competitors, especially in the presence of light changes and poor illumination, thanks to the use of depth input images. We also collected and shared a new realistic dataset acquired inside a car, called MotorMark, to train and test the system. In addition, we used the public Eurecom Kinect Face Dataset for the evaluation phase, achieving promising results in terms of both accuracy and computational speed.

Learning to Map Vehicles into Bird's Eye View

Awareness of the road scene is an essential component for both autonomous vehicles and Advanced Driver Assistance Systems, and its relevance is growing both in academic research and in the automotive industry.

This paper presents a way to learn a semantic-aware transformation which maps detections from a dashboard camera view onto a broader bird's eye occupancy map of the scene. To this end, a huge synthetic dataset featuring pairs of frames taken from both dashboard and bird's eye view in driving scenarios is collected: more than 1 million examples are automatically annotated. A deep network is then trained to warp detections from the first to the second view. We demonstrate the effectiveness of our model against several baselines and observe that it is able to generalize to real-world data despite having been trained solely on synthetic data.
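For intuition about the mapping task, the sketch below shows a purely geometric alternative: projecting the ground-contact point of a detection through a planar homography. This is a simple flat-road baseline, not the learned transformation presented in the paper. The matrix values, the box format, and the function names are assumptions; a real homography would come from camera calibration.

```python
from typing import Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]
Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels
Point = Tuple[float, float]


def ground_point(box: Box) -> Point:
    """Bottom-center of the box, assumed to touch the road surface."""
    x_min, _, x_max, y_max = box
    return ((x_min + x_max) / 2.0, y_max)


def apply_homography(h: Matrix3, point: Point) -> Point:
    """Map an image point to the bird's eye plane with a 3x3 homography."""
    x, y = point
    u = h[0][0] * x + h[0][1] * y + h[0][2]
    v = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    if abs(w) < 1e-12:
        raise ValueError("point maps to infinity under this homography")
    return (u / w, v / w)


# Placeholder matrix (pure scaling), chosen only to keep the example readable.
H = [[0.5, 0.0, 0.0], [0.0, 0.5, 0.0], [0.0, 0.0, 1.0]]
detection = (100.0, 50.0, 200.0, 150.0)
bev_position = apply_homography(H, ground_point(detection))
```

A baseline of this kind assumes a flat road and recovers only a single point per vehicle, whereas the learned model is trained to warp whole detections between the two views.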