
Research on Continual Learning

General Continual Learning

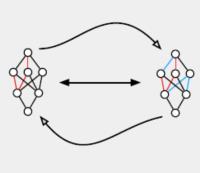

Neural networks are known to suffer from the infamous issue of Catastrophic Forgetting (also called Catastrophic Interference) when the distribution of their training data shifts. Continual Learning (CL) is a branch of Machine Learning that aims to train systems that overcome this problem. Because most of the evaluation settings proposed for CL fail to capture the properties of practical scenarios, we strive to address General Continual Learning (GCL), a setting in which both the domain and the class distributions may shift, either gradually or suddenly.
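The difference between sudden and gradual shifts can be made concrete with a toy data stream. The sketch below is a hypothetical illustration, not the benchmark used in ImageLab's work. It assumes a stream of `num_steps` samples whose class label and domain index (the index could stand for, e.g., an image transformation or style) are drawn from mixing weights. These weights either jump between consecutive values at fixed boundaries (sudden) or blend linearly between them (gradual). The names `mixing_weights` and `gcl_stream` are invented for this example.

```python
import random
from typing import Iterator, List, Tuple


def mixing_weights(t: int, num_steps: int, num_items: int, gradual: bool) -> List[float]:
    """Sampling weights over `num_items` (classes or domains) at step t.

    Hypothetical schedule: the stream moves from item 0 to item num_items - 1.
    """
    weights = [0.0] * num_items
    if gradual:
        # Continuous position along the item axis, from 0.0 to num_items - 1.
        pos = t * (num_items - 1) / max(num_steps - 1, 1)
        low = int(pos)
        high = min(low + 1, num_items - 1)
        frac = pos - low
        # Linearly blend the two neighbouring items, so they overlap in time.
        weights[low] += 1.0 - frac
        weights[high] += frac
    else:
        # Equal-length blocks with abrupt boundaries, as in task-based CL.
        block = min(t * num_items // num_steps, num_items - 1)
        weights[block] = 1.0
    return weights


def gcl_stream(num_steps: int, num_classes: int, num_domains: int,
               gradual: bool, seed: int = 0) -> Iterator[Tuple[int, int, int]]:
    """Yield (step, class_label, domain_index) triples from a shifting stream."""
    rng = random.Random(seed)
    for t in range(num_steps):
        class_w = mixing_weights(t, num_steps, num_classes, gradual)
        domain_w = mixing_weights(t, num_steps, num_domains, gradual)
        # Class and domain are sampled independently from their own schedules.
        label = rng.choices(range(num_classes), weights=class_w)[0]
        domain = rng.choices(range(num_domains), weights=domain_w)[0]
        yield t, label, domain
```

In the sudden variant, labels and domains change at fixed, equally spaced boundaries, mimicking the task boundaries of standard CL benchmarks. In the gradual variant, consecutive classes and domains overlap for a while, so there is no well-defined point at which one "task" ends and the next begins. A GCL learner must therefore cope without relying on such boundaries.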